Deep-fakes: the era of digital manipulation

Technology has reached impressive levels, but it has also given rise to new threats, and deep-fakes are among the most serious of them. This phenomenon combines artificial intelligence with media manipulation. It has raised widespread concern across many sectors because of the harm it can cause to society.

What are deep-fakes?

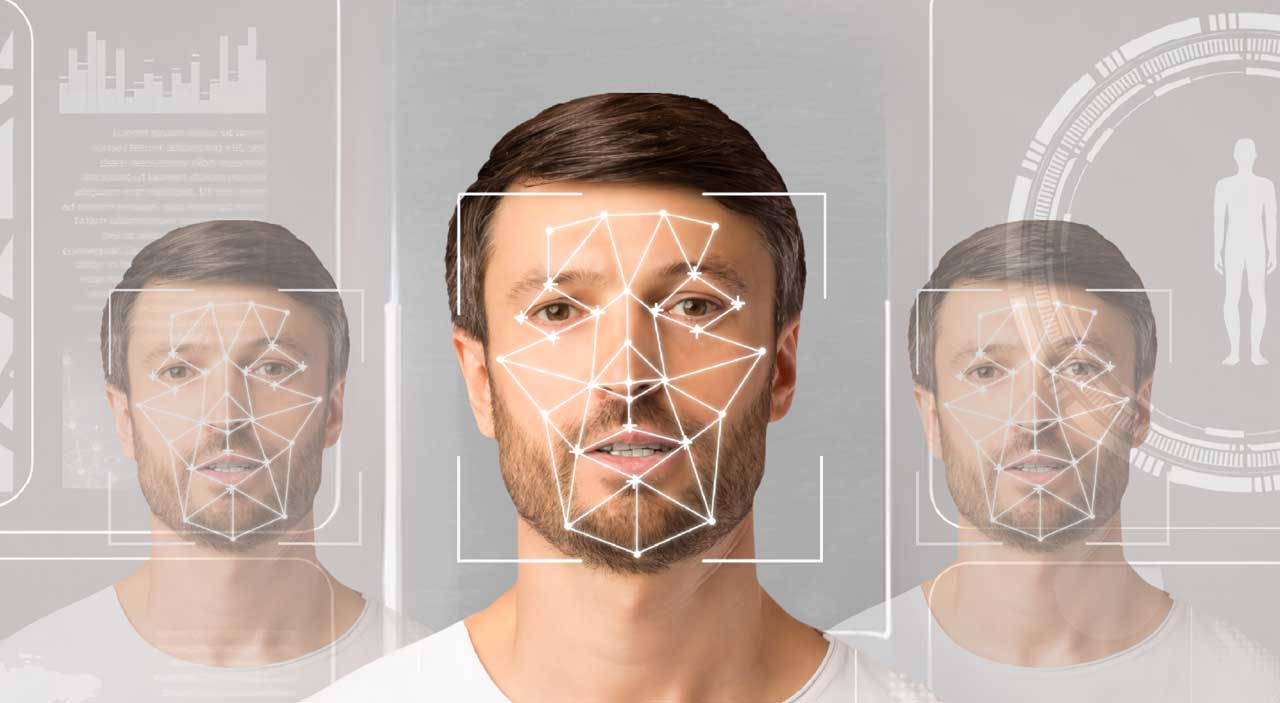

Deep-fakes are fake multimedia content, mostly videos, generated with artificial intelligence. In them, real people's faces and voices are superimposed onto fictitious bodies and settings. The results can look so authentic that it becomes very difficult to tell genuine material from manipulated material.

Impact on society

Misinformation and fake news: Deep-fakes can be used to fabricate statements by public figures, politicians or celebrities. This spreads misleading information and can trigger reputational damage and crises of trust.

Threat to privacy: Placing people's faces in compromising situations can have devastating consequences for the privacy and reputation of both public figures and private individuals.

Political and social sabotage: Manipulated speeches and statements can be used for political purposes, for example to fuel social discontent.

Responses and challenges

Technological development: As artificial intelligence advances, it is crucial to develop more effective methods for detecting deep-fakes in order to counter this threat.

Education and awareness: The public should be informed about deep-fakes and their potential consequences, so that people are aware of the risk and can take the necessary precautions.

Effective regulation: Laws and regulations addressing the malicious use of deep-fakes are essential to counter this threat and protect society.

In short, societies, governments and companies must work together to develop effective strategies that mitigate the risks and impact of deep-fakes.